There will be plenty of interesting cases involving self-driving cars: whether the car's autopilot features helped or hurt the outcome, who is liable, and whether autonomous cars are a good idea at all. This recent fatal crash in Florida isn't one of them.


Before I recap, let me explain what Tesla's Autopilot does: It looks for the lane markers on the road so it can steer the car to follow your current lane. And it looks for cars in front of you so it slows down if they slow down. That's it. (There's a lane change system that needs to be manually invoked, but that's not relevant to this case.)
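To make concrete how little that is, here is a minimal Python sketch of a "stay in the lane, slow down for the car ahead" controller. It is not Tesla's implementation: the `Perception` type, the proportional steering gain, and the 100 m following range are hypothetical values chosen for illustration. Notice what the inputs lack: no map, no traffic lights, no signs, no cross traffic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Perception:
    """Hypothetical sensor summary: everything this toy controller ever sees."""
    lane_offset_m: Optional[float]    # distance right of lane center; None if no markings found
    lead_distance_m: Optional[float]  # gap to the car ahead in our lane; None if no car detected
    lead_speed_mps: Optional[float]   # speed of that car ahead


def steering_command(p: Perception, gain: float = 0.1) -> Optional[float]:
    """Steer proportionally back toward the lane center.

    Returns None when no lane markings are visible, i.e. the controller
    has nothing to follow (as in an unmarked intersection).
    """
    if p.lane_offset_m is None:
        return None
    return -gain * p.lane_offset_m


def target_speed(p: Perception, set_speed_mps: float,
                 follow_range_m: float = 100.0) -> float:
    """Hold the driver's set speed, but never go faster than a nearby car ahead."""
    if p.lead_distance_m is None or p.lead_speed_mps is None:
        return set_speed_mps
    if p.lead_distance_m <= follow_range_m:
        return min(set_speed_mps, p.lead_speed_mps)
    return set_speed_mps


if __name__ == "__main__":
    # Freeway: lane markings visible, a slower car 60 m ahead.
    freeway = Perception(lane_offset_m=0.3, lead_distance_m=60.0, lead_speed_mps=25.0)
    print(steering_command(freeway), target_speed(freeway, set_speed_mps=30.0))
    # Unmarked intersection: no lane to follow, so steering returns None.
    intersection = Perception(lane_offset_m=None, lead_distance_m=None, lead_speed_mps=None)
    print(steering_command(intersection), target_speed(intersection, set_speed_mps=30.0))
```

Everything this sketch "knows" fits in three numbers, which is the point: nothing in it could reason about a truck turning across the road.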

I can't stress enough how simplistic this system is. It doesn't use mapping data to drive, and it doesn't predict or analyze. It doesn't know traffic laws, can't read signage, and can't see traffic lights. It doesn't consider other vehicles' brake lights or turn signals. It simply stays in the lane and slows down for obstacles. The lane detection isn't even particularly good: if you drive through an intersection, you can tell it's immediately lost because there are no markings. And the obstacle detection doesn't work if the object is stationary and you're going fast, as seen in this video of a crash.
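One plausible mechanism behind the stationary-object weakness, common to radar-based adaptive cruise systems in general: at speed, returns from objects that aren't moving are hard to tell apart from overhead signs, bridges, and roadside clutter, so they may simply be filtered out. I don't know whether Tesla's system works exactly this way; the `RadarReturn` type, the function, and its thresholds below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RadarReturn:
    range_m: float
    closing_speed_mps: float  # how fast the gap shrinks, measured along our direction of travel


def keep_as_obstacle(ret: RadarReturn, ego_speed_mps: float,
                     stationary_tol_mps: float = 1.0,
                     filter_above_mps: float = 15.0) -> bool:
    """Hypothetical clutter filter: drop 'stationary' returns when driving fast."""
    # The object's own speed along our direction = our speed minus the closing speed.
    object_speed_mps = ego_speed_mps - ret.closing_speed_mps
    looks_stationary = abs(object_speed_mps) < stationary_tol_mps
    if looks_stationary and ego_speed_mps >= filter_above_mps:
        return False  # treated as a sign, bridge, or other roadside clutter
    return True
```

A side effect worth noticing: a vehicle crossing perpendicular to your path has almost no speed along your direction of travel, so to a filter like this it looks just like a stationary object. For example, `keep_as_obstacle(RadarReturn(range_m=80.0, closing_speed_mps=30.0), ego_speed_mps=30.0)` returns `False`.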

During our introductory drive, when Tesla staff walked us through all the features, they made this abundantly clear. They let us use Autopilot on a surface road (which you are explicitly told not to do) and cross an intersection that sits in a curve and has no markings, so the car sometimes ended up in a different lane.

In short: Autopilot only works on a freeway, where you're simply following a path and the cars around you are moving at roughly your speed.

Now let's look at the Florida Highway Patrol's crash report:

Image credit: Florida Highway Patrol

Notice something? This is not a freeway. Yes, it's Highway 27, and if you take things literally, the Tesla staff did say you can use Autopilot on a highway, and "Highway 27" certainly sounds like one. But there is cross traffic, and Autopilot cannot handle cross traffic.

Of course, you could get lucky. A Tesla will brake when there's an obstacle in front of you, but that doesn't work reliably if the obstacle isn't moving at roughly the same speed and in the same direction as you. In its press release about the fatal crash, Tesla said the obstacle detection didn't see the white truck against the bright white background. But the real issue is that Autopilot was engaged where it never should have been engaged in the first place!


In my opinion, both parties are at fault. The truck made a left turn without yielding to oncoming traffic. The Tesla driver used Autopilot where it was not meant to be used and, on top of that, didn't pay attention.

Autopilot is great. I love it. But it's barely more than glorified cruise control. On long freeway drives I can relax more: I don't touch the steering wheel or pedals, and I could theoretically take my eyes off the road. But I've seen it screw up a few times, so I wouldn't close my eyes and let it do its thing, even on a freeway.

But using it on a road with cross traffic? That is borderline suicidal. I guess the driver figured he had the right of way and didn't need to worry about cross traffic. But that means having far too much faith in your fellow drivers, and that faith was clearly misplaced here.

Maybe the problem is that the name "Autopilot" sounds too much like a self-driving car, so people overestimate its capabilities. But the driver had plenty of experience with Autopilot and had posted videos about it before, so I believe it simply comes down to the mistaken assumption that other motorists drive defensively and won't create a dangerous situation through negligence. Tesla's Autopilot doesn't do nearly enough predictive modeling to handle that.

Tesla could disable Autopilot on all roads that aren't suitable for it. The car has mapping data, so it knows what kind of road you're on. This is generally what you have to do when the general public can't be trusted to use your product correctly. That would suck, though: I sometimes use it on surface roads where I know the road's characteristics give Autopilot enough to work with, and of course I'm more alert there than I would be on a freeway. I'd hate to lose that feature because some people don't understand the system's limitations.
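A map-based lockout like that could be as simple as checking the road type before letting Autopilot engage. This is a hypothetical sketch: the `RoadSegment` fields stand in for whatever attributes real map data provides, and the rule (limited-access roads only, no at-grade intersections) is my assumption about what "suitable" means.

```python
from dataclasses import dataclass


@dataclass
class RoadSegment:
    name: str
    limited_access: bool          # entered only via ramps, like a freeway
    at_grade_intersections: bool  # cross traffic can enter the roadway


def autopilot_allowed(road: RoadSegment) -> bool:
    """Hypothetical engagement rule: freeway-like roads without cross traffic only."""
    return road.limited_access and not road.at_grade_intersections


def try_engage(road: RoadSegment) -> str:
    if autopilot_allowed(road):
        return f"Autopilot engaged on {road.name}"
    return f"Autopilot unavailable on {road.name}: road type not supported"


if __name__ == "__main__":
    # Example segments; the attributes are illustrative, not real map data.
    freeway = RoadSegment("example interstate", limited_access=True, at_grade_intersections=False)
    highway_27 = RoadSegment("Highway 27", limited_access=False, at_grade_intersections=True)
    print(try_engage(freeway))
    print(try_engage(highway_27))
```

The downside described above shows up directly in the rule: it would also lock out the surface roads where an attentive driver knows Autopilot copes fine.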

This isn't a new problem: autopilot in aircraft has been an issue for decades. As far back as the 1990s, autopilot was blamed for confusing pilots and causing crashes. A prominent, more recent example is Air France Flight 447. This is the paradox of automation: in a partially automated system, human interaction becomes more critical, not less.

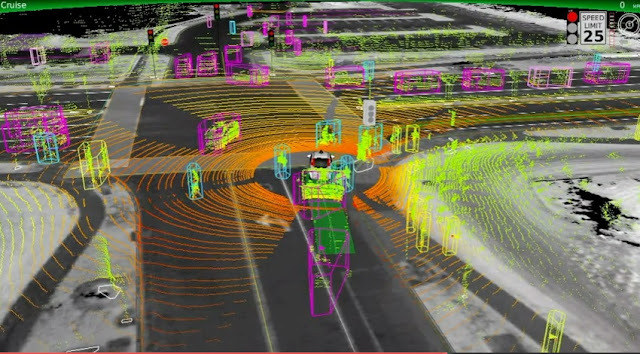

The optimal solution would presumably be for as many vehicles as possible to be fully automated, with zero human intervention. Since that is still a long way off, here is what I'm hoping for in the meantime:

- As the technology becomes more widespread, drivers become more familiar with the limitations of current semi-autonomous cars

- Better UX design makes it more obvious what the car can and cannot do

- The technology gradually improves to handle more and more use cases

To drive home how limited Tesla's Autopilot is, let's look at the display:

The car can tell that there's a lane to both the left and the right of the current one, it can tell that the lane is currently going straight, it knows the speed limit from the mapping data (although it only uses that to patronize you), and it can sense three cars in front... and yeah, that's mostly it. Of course there's more data internally, but what you see here is basically all the information it uses to drive the car.

Now look at a representation of the data Google's self-driving car considers, courtesy of a TED talk by Chris Urmson:

Image credits: Google

See the difference? This is the kind of data you need for a fully autonomous car. If you rely on Tesla's Autopilot and stop paying attention to the road, the fault is entirely yours.
